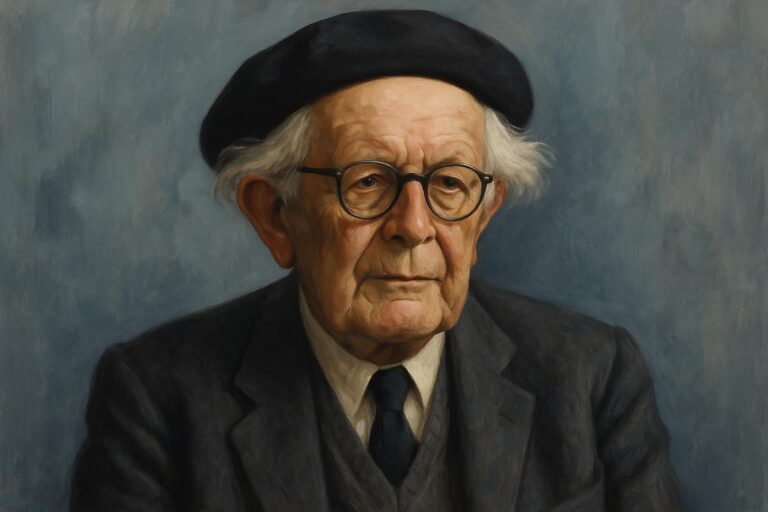

B.F. Skinner: Life, Work, and the Development of Operant Conditioning

Quick Summary

B.F. Skinner was a major figure in behavioral psychology, best known for introducing operant conditioning, a theory explaining how behavior is shaped by its consequences. Through controlled experiments using devices like the Skinner Box, Skinner demonstrated that actions followed by reinforcement are more likely to be repeated. His work influenced fields ranging from education to therapy and laid the foundation for applied behavior analysis.

Early Life

Burrhus Frederic Skinner was an American psychologist who helped shape modern behavioral psychology. Born in 1904 in Susquehanna, Pennsylvania, he originally studied English literature at Hamilton College with the goal of becoming a writer. After becoming disillusioned with fiction, he turned to psychology, inspired by the work of John B. Watson. He earned his doctorate from Harvard University in 1931 and later conducted much of his research there.[1][2]

Focus on Behavior and Learning

John B. Watson had argued that psychology should study only observable behavior. Adopting this view, Skinner rejected introspection as a method and emphasized the role of the environment in shaping actions. This perspective laid the groundwork for his later development of operant conditioning.

Development of Operant Conditioning

Inspired by Edward Thorndike’s Law of Effect, Skinner proposed that behavior is influenced not just by preceding stimuli, but also by the consequences that follow it. He introduced the term “operant” to describe voluntary behaviors that act on the environment to produce results. This concept became the foundation of his most influential contribution: operant conditioning.[3]

In operant conditioning, the consequences of a behavior change how often that behavior occurs. According to Skinner, reinforcement increases the likelihood that a behavior will occur again, while punishment reduces it. These ideas formed the basis of what would become a central framework in behavioral psychology.[4]

The Skinner Box and Experimental Methods

To test his ideas, Skinner developed the operant conditioning chamber, more commonly known as the Skinner Box. In this setup, animals such as rats or pigeons were placed in a controlled environment where they could press levers or peck keys to receive food or avoid mild shocks. The box allowed Skinner to manipulate conditions and observe behavior with precision.[5]

Summary of Experimental Findings

Skinner observed that animals quickly learned to repeat behaviors that were followed by rewards and to perform less often those that went unrewarded or were followed by mild punishment. For example, a rat placed in the box pressed a lever more frequently when doing so reliably produced food. These findings provided strong evidence that behavior could be modified through reinforcement and punishment, supporting his broader theory of operant conditioning.
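The lever-pressing pattern described above can be illustrated with a small simulation. This is only a sketch under stated assumptions: the linear update rule, the function names `update_press_probability` and `simulate`, the starting probability, and the learning rate are all hypothetical choices made for illustration, not a model or data taken from Skinner's experiments. Each trial is treated as one lever press that either is or is not followed by food.

```python
def update_press_probability(p, reinforced, rate=0.2):
    """Return the lever-press probability after one trial.

    Illustrative linear rule (an assumption, not Skinner's own model):
    a reinforced press moves p toward 1, an unreinforced press moves p toward 0.
    """
    target = 1.0 if reinforced else 0.0
    return p + rate * (target - p)


def simulate(trials, p_start=0.1, rate=0.2):
    """Apply the update once per trial; `trials` is a list of booleans."""
    history = [p_start]
    for reinforced in trials:
        history.append(update_press_probability(history[-1], reinforced, rate))
    return history


if __name__ == "__main__":
    # Hypothetical schedule: 10 reinforced presses, then 10 unreinforced ones.
    schedule = [True] * 10 + [False] * 10
    for trial, p in enumerate(simulate(schedule)):
        print(f"trial {trial:2d}: p(press) = {p:.3f}")
```

In this toy model the press probability climbs while food follows each press and falls again once the food stops, which mirrors the qualitative pattern reported above. It says nothing about the actual rates of learning in real animals.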

Influence on Behaviorism

Skinner’s work expanded on the ideas of Watson and Thorndike, pushing behaviorism in a more experimental and applied direction. Unlike earlier behaviorists who focused on stimulus-response links, Skinner emphasized the power of consequences in shaping voluntary actions. This approach, known as radical behaviorism, proposed that behavior could be explained without appealing to internal mental states as causes; Skinner treated thoughts and feelings as behavior to be explained in their own right.[6]

His emphasis on observable behavior and empirical methods helped establish behaviorism as the dominant psychological perspective in mid-20th-century America. Although later challenged by cognitive psychology, Skinner’s influence remains strong in areas where behavior can be measured and modified.

Applications and Legacy

Skinner’s ideas found practical applications in education, mental health, and even social theory. He developed teaching machines and promoted programmed instruction, tools designed to reinforce learning through immediate feedback. These approaches were based on the same reinforcement principles he studied in the lab.

In his novel Walden Two, Skinner imagined a society organized around behavioral principles, reflecting his belief in the power of psychology to improve human life.[7] He also attempted to apply operant principles to language and communication in his 1957 book Verbal Behavior, though this work drew sharp criticism, most notably in a 1959 review by the linguist Noam Chomsky.

Perhaps his most lasting legacy is the field of applied behavior analysis (ABA), which draws directly from his theories and is widely used in education, therapy, and interventions for developmental conditions such as autism.[8]

Conclusion

B.F. Skinner made a lasting impact on psychology through both his theory of operant conditioning and his broader behavioral outlook. By emphasizing the role of consequences, designing precise experiments, and applying his principles to real-world challenges, Skinner helped shape psychology into a more scientific and practical discipline. His work continues to influence learning theory, therapy, and behavior management across fields.

References

[1] Bjork, D. W. (1997). B.F. Skinner: A Life. Washington, DC: American Psychological Association.

[2] Encyclopaedia Britannica. (2025). B.F. Skinner. Retrieved from https://www.britannica.com/biography/B-F-Skinner

[3] Pierce, W. D., & Cheney, C. D. (2017). Behavior Analysis and Learning (6th ed.). Psychology Press.

[4] Skinner, B. F. (1938). The Behavior of Organisms: An Experimental Analysis. New York: Appleton-Century.

[5] Catania, A. C. (2006). Learning (4th ed.). Sloan Publishing.

[6] Skinner, B. F. (1974). About Behaviorism. Knopf.

[7] Skinner, B. F. (1948). Walden Two. Macmillan.

[8] Cooper, J. O., Heron, T. E., & Heward, W. L. (2019). Applied Behavior Analysis (3rd ed.). Pearson.